Hadoop Installation On Windows Tutorial

In this Datasets for Hadoop Practice tutorial, I am going to share a few free Hadoop data sources that are available for use. You can download these and start practicing Hadoop easily. I have compiled a list of the datasets available and shortlisted around 10 of them for Hadoop practice. Working with these datasets will give you real examples and hands-on experience of Hadoop and its ecosystem.

This tutorial also aims to provide a step-by-step guide to building a Hadoop binary distribution from the Hadoop source code on Windows. It also provides instructions for setting up Java, Maven, and other required components. Apache Hadoop is an open source Java project, mainly used for distributed storage and computation.

Top Hadoop Datasets for Practice

Here is the list of free Hadoop datasets for practice:

1. A quarterly full data set of Stack Exchange. You can get around 10 GB of data here, which makes it an ideal source of Hadoop datasets for practice.
2. grouplens.org has a great collection of datasets for Hadoop practice. Check the site and download the available data for live examples.
3. It’s no secret that Amazon is among the market leaders when it comes to cloud. AWS is used on a large scale with Hadoop, and Amazon also provides a lot of datasets for Hadoop practice, which you can download.
4. This university provides a quality data set for machine learning.
5. 1 billion web pages collected between January and February 2009, 5 TB compressed.
6. Wikipedia also provides datasets for Hadoop practice. You will have fresh, real data to use.
7. A huge collection of 180+ datasets.
8. You may find almost all categories of datasets here.
9. AWS officially provides example datasets here.
10. A list of a large number of free datasets for practice.

That was the list of datasets for Hadoop practice. Use these datasets for Hadoop projects and practice with a large chunk of data. They are free, and all you have to do is download the data sets and start practicing.

Also, if you have Hadoop installed on your PC, you can generate practice data with the bundled example jobs:

• hadoop jar /usr/lib/hadoop-0.20-mapreduce/hadoop-examples.jar randomwriter /random-data: generates 10 GB of data per node under the folder /random-data in HDFS.
• hadoop jar /usr/lib/hadoop-0.20-mapreduce/hadoop-examples.jar randomtextwriter /random-text-data: generates 10 GB of textual data per node under the folder /random-text-data in HDFS.

Which dataset do you use for Hadoop practice?

What is Hadoop?

Hadoop is an open source project written in Java and designed to provide users with two things: a distributed file system (HDFS) and a method for distributed computation. It’s based on concepts published by Google that discuss how to build a framework capable of executing intensive computations across tons of computers.

Something that might, you know, be helpful in building a giant search index. The Hadoop project’s own documentation has more information and background on Hadoop.

What’s the big deal about running it on Windows?

Looking for Linux?

If you’re looking for a comprehensive guide to getting Hadoop running on Linux, please check out Michael Noll’s excellent guides. This post was inspired by these very informative articles.

Hadoop’s key design goal is to provide storage and computation on lots of homogeneous “commodity” machines, usually fairly beefy machines running Linux.

With that goal in mind, the Hadoop team has logically focused on Linux platforms in their development and documentation. Their documentation even includes the caveat that “Win32 is supported as a development platform. Distributed operation has not been well tested on Win32, so this is not a production platform.” If you want to use Windows to run Hadoop in pseudo-distributed or distributed mode (more on these modes in a moment), you’re pretty much left on your own. Now, most people will still probably not run Hadoop in production on Windows machines, but being able to deploy on the most widely used platform in the world is still a good idea, since it opens Hadoop up to the many developers who use Windows on a daily basis.

Caveat Emptor

I’m one of the few that has invested the time to set up an actual distributed Hadoop installation on Windows. I’ve used it for some successful development tests.

I have not used this in production. Also, although I can get around in a Linux/Unix environment, I’m no expert, so some of the advice below may not be the correct way to configure things. I’m also no security expert. If any of you out there have corrections or advice for me, please let me know in a comment and I’ll get it fixed. This guide uses Hadoop 0.17 and assumes that you don’t have any previous Hadoop installation. I’ve also done my primary work with Hadoop on Windows XP.

Where I’m aware of differences between XP and Vista, I’ve tried to note them. Please comment if something I’ve written is not appropriate for Vista. Bottom line: your mileage may vary, but this guide should get you started running Hadoop on Windows.

A quick note on distributed Hadoop

Hadoop runs in one of three modes:

• Standalone: All Hadoop functionality runs in one Java process. This works “out of the box” and is trivial to use on any platform, Windows included.
• Pseudo-Distributed: Hadoop functionality all runs on the local machine, but the various components run as separate processes. This is much more like “real” Hadoop and does require some configuration as well as SSH. It does not, however, permit distributed storage or processing across multiple machines.
• Fully Distributed: Hadoop functionality is distributed across a “cluster” of machines. Each machine participates in somewhat different (and occasionally overlapping) roles. This allows multiple machines to contribute processing power and storage to the cluster.

The official Hadoop documentation can get you started on Standalone mode and Pseudo-Distributed mode (to some degree). Take a look at that if you’re not ready for Fully Distributed. This guide focuses on the Fully Distributed mode of Hadoop.

After all, it’s the most interesting mode, the one where you’re actually doing real distributed computing.

Pre-Requisites

Java

I’m assuming that if you’re interested in running Hadoop, you’re familiar with Java programming and have Java installed on all the machines on which you want to run Hadoop.

The Hadoop docs recommend Java 6 and require at least Java 5. Whichever you choose, you need to make sure that you have the same major Java version (5 or 6) installed on each machine. Also, any code you write for running using Hadoop’s MapReduce must be compiled with the version you choose. If you don’t have Java installed, go get it from Sun and install it. I will assume you’re using Java 6 in the rest of this guide.
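Since every machine needs the same major Java version, it can help to compare them from a shell (for example the Cygwin shell installed later in this guide). The following is a minimal sketch, assuming the first line of the `java -version` output looks like `java version "1.6.0_06"`. The function name `java_major` is hypothetical and not part of Java or Hadoop.

```shell
# java_major BANNER: print the major Java version found in the first line
# of `java -version` output (hypothetical helper, not part of Java or Hadoop).
java_major() {
  # Pull out the quoted version string, e.g. 1.6.0_06
  version=$(printf '%s\n' "$1" | sed -n 's/.*version "\([^"]*\)".*/\1/p')
  case "$version" in
    1.*) printf '%s\n' "$version" | cut -d. -f2 ;;  # old scheme: 1.6.0_06 is Java 6
    *)   printf '%s\n' "$version" | cut -d. -f1 ;;
  esac
}

# Usage on each machine (java prints its banner to stderr):
#   java_major "$(java -version 2>&1 | head -n 1)"
```

Run the usage line on the master and on each slave; if the numbers differ, fix that before going further.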

Cygwin

As I said in the introduction, Hadoop assumes Linux (or a Unix flavor OS) is being used to run Hadoop. This assumption is buried pretty deeply. Various parts of Hadoop are executed using shell scripts that will only work on a Linux shell. It also uses passwordless secure shell (SSH) to communicate between computers in the Hadoop cluster. The best way to do these things on Windows is to make Windows act more like Linux.

You can do this using Cygwin, which provides a “Linux-like environment for Windows” that allows you to use Linux-style command line utilities as well as run really useful Linux-centric software like OpenSSH. Don’t install it yet. I’ll describe how you need to install it below.

I’m writing this guide for Hadoop version 0.17 and I will assume that’s what you’re using.

More than one Windows PC on a LAN

It should probably go without saying that to follow this guide, you’ll need to have more than one PC. I’m going to assume you have two computers and that they’re both on your LAN. Go ahead and designate one to be the Master and one to be the Slave. These machines together will be your “cluster”. The Master will be responsible for ensuring the Slaves have work to do (such as storing data or running MapReduce jobs). The Master can also do its share of this work.

If you have more than two PCs, you can always set up Slave2, Slave3 and so on. Some of the steps below will need to be performed on all your cluster machines, some on just the Master or the Slaves. I’ll note which apply for each step.

Step 1: Configure your hosts file (All machines)

This step isn’t strictly necessary, but it will make your life easier down the road if your computers change IPs. It’ll also help you keep things straight in your head as you edit configuration files.

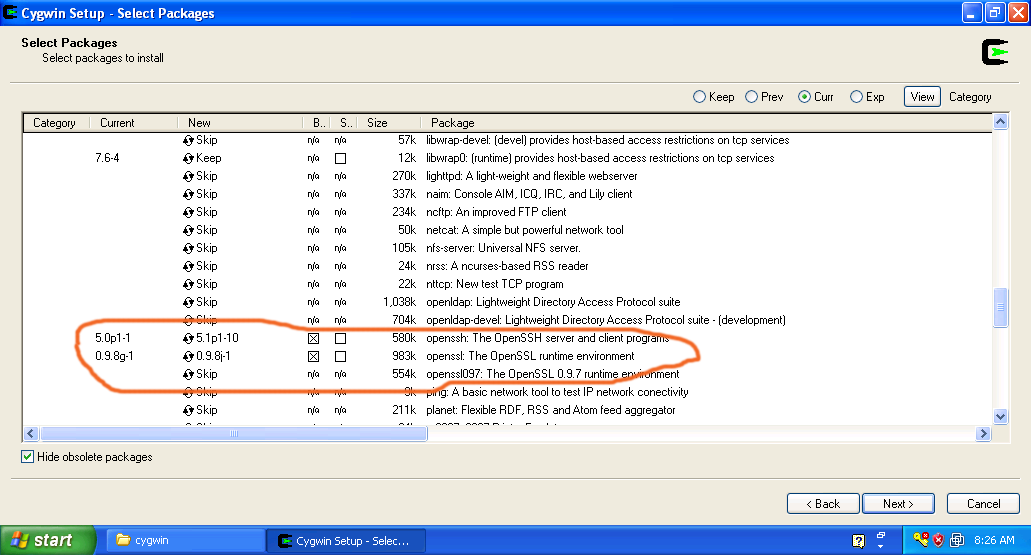
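If you’d rather script this step than edit the hosts file by hand, here is a minimal sketch for a Cygwin shell. It assumes hosts entries of the form `IP-address hostname`; the helper name `add_host` and the example IP addresses are hypothetical. It only appends an entry when the name is not already present, so running it twice is harmless.

```shell
# add_host FILE IP NAME: append "IP NAME" to FILE unless NAME already has
# an entry (hypothetical helper; hosts lines are "IP-address hostname").
add_host() {
  hosts_file=$1
  ip=$2
  name=$3
  # Skip if a non-comment line already maps some address to NAME
  if grep -Eq "^[[:space:]]*[^#[:space:]]+[[:space:]]+$name([[:space:]]|\$)" "$hosts_file" 2>/dev/null; then
    return 0
  fi
  printf '%s\t%s\n' "$ip" "$name" >> "$hosts_file"
}

# Example with made-up addresses (replace with your own machines' IPs):
#   add_host /cygdrive/c/windows/system32/drivers/etc/hosts 192.168.1.10 master
#   add_host /cygdrive/c/windows/system32/drivers/etc/hosts 192.168.1.11 slave
```

The `/cygdrive/c/...` path is how Cygwin normally exposes the C: drive; on Vista you will probably need to run the shell as an administrator to write to this file.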

Open your Windows hosts file located at c:\windows\system32\drivers\etc\hosts (the file is named “hosts” with no extension) in a text editor and add the following lines (replacing the NNNs with the IP addresses of the master and the slave):

    NNN.NNN.NNN.NNN master
    NNN.NNN.NNN.NNN slave

Save the file.

Step 2: Install Cygwin and Configure OpenSSH sshd (All machines)

Cygwin has a bit of an odd installation process because it lets you pick and choose which libraries of useful Linux-y programs and utilities you want to install. In this case, we’re really installing Cygwin to be able to run shell scripts and OpenSSH. OpenSSH is an implementation of an SSH server (sshd) and client (ssh). If you’re not familiar with SSH, you can think of it as a secure version of telnet. With the ssh command, you can log in to another computer running sshd and work with it from the command line. Instead of reinventing the wheel, I’m going to point you to an existing set of instructions for installing Cygwin and configuring sshd.

You can stop after instruction 6. Like the linked instructions, I’ll assume you’ve installed Cygwin to c:\cygwin, though you can install it elsewhere. If you’re running a firewall on your machine, you’ll need to make sure port 22 is open for incoming SSH connections. As always with firewalls, open your machine up as little as possible. If you’re using Windows Firewall, make sure the open port is scoped to your LAN. Microsoft has documentation on how to do all this with Windows Firewall (scroll down to the section titled “Configure Exceptions for Ports”).

Step 3: Configure SSH (All Machines)

Hadoop uses SSH to allow the master computer(s) in a cluster to start and stop processes on the slave computers. One of the nice things about SSH is that it supports several modes of secure authentication: you can use passwords or you can use public/private keys to connect without passwords (“passwordless”). Hadoop requires that you set up SSH to do the latter. I’m not going to go into great detail on how this all works, but suffice it to say that you’re going to do the following:

• Generate a public-private key pair for your user on each cluster machine.
• Exchange each machine user’s public key with each other machine user in the cluster.

Generate public/private key pairs

To generate a key pair, open Cygwin and issue the following commands ($> is the command prompt):

    $> ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa
    $> cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

Now, you should be able to SSH into your local machine using the following command:

    $> ssh localhost

When prompted for your password, enter it. You’ll see something like the following in your Cygwin terminal:

    Hayes@localhost's password:
    Last login: Sun Jun 8 19: from localhost
    hayes@calculon ~
    $>

To quit the SSH session and go back to your regular terminal, use:

    $> exit

Make sure to do this on all computers in your cluster.

Exchange public keys

Now that you have public and private key pairs on each machine in your cluster, you need to share your public keys around to permit passwordless login from one machine to the other. Once a machine has a public key, it can safely authenticate a request from a remote machine that is encrypted using the private key that matches that public key.

On the master, issue the following command in Cygwin (where “<username>” is the username you use to log in to Windows on the slave computer):

    $> scp ~/.ssh/id_dsa.pub <username>@slave:~/.ssh/master-key.pub

Enter your password when prompted. This will copy the public key file in use on the master to the slave. On the slave, issue the following command in Cygwin:

    $> cat ~/.ssh/master-key.pub >> ~/.ssh/authorized_keys

This will append the master’s public key to the set of authorized keys the slave accepts for authentication purposes. Back on the master, test this out by issuing the following command in Cygwin:

    $> ssh <username>@slave

If all is well, you should be logged into the slave computer with no password required.
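One thing to watch with the append step: running `cat ... >>` more than once leaves duplicate entries in authorized_keys. As a minimal sketch, assuming one key per line in the public key file, the helper below only appends keys that are not already there. The name `authorize_key` is hypothetical and not an OpenSSH command.

```shell
# authorize_key PUBFILE [AUTHFILE]: append each key in PUBFILE to AUTHFILE
# (default ~/.ssh/authorized_keys) unless that exact line is already present.
# Hypothetical helper, not part of OpenSSH.
authorize_key() {
  pub_file=$1
  auth_file=${2:-$HOME/.ssh/authorized_keys}
  mkdir -p "$(dirname "$auth_file")"
  touch "$auth_file"
  while IFS= read -r key || [ -n "$key" ]; do
    if [ -n "$key" ]; then
      # -x whole-line match, -F fixed string (no regex interpretation)
      grep -qxF -- "$key" "$auth_file" || printf '%s\n' "$key" >> "$auth_file"
    fi
  done < "$pub_file"
  # sshd generally expects this file not to be writable by others
  chmod 600 "$auth_file"
}

# Usage on the slave, after the scp step:
#   authorize_key ~/.ssh/master-key.pub
```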

Repeat this process in reverse, copying the slave’s public key to the master. Also, make sure to exchange public keys between the master and any other slaves that may be in your cluster.

Configure SSH to use default usernames (optional)

If all of your cluster machines are using the same username, you can safely skip this step. If not, read on. Most Hadoop tutorials suggest that you set up a user specific to Hadoop. If you want to do that, you certainly can. Why set up a specific user for Hadoop?

Well, in addition to being more secure from a file permissions and security perspective, when Hadoop uses SSH to issue commands from one machine to another it will automatically try to login to the remote machine using the same user as the current machine. If you have different users on different machines, the SSH login performed by Hadoop will fail.

However, most of us on Windows typically use our machines with a single user and would probably prefer not to have to set up a new user on each machine just for Hadoop. The way to allow Hadoop to work with multiple users is by configuring SSH to automatically select the appropriate user when Hadoop issues its SSH command. (You’ll also need to edit the hadoop-env.sh config file, but that comes later in this guide.) You can do this by editing the file named “config” (no extension) located in the same “.ssh” directory where you stored your public and private keys for authentication. Cygwin stores this directory under “c:\cygwin\home\<username>\.ssh”.

On the master, create a file called config and add the following lines (replacing “<username>” with the username you’re using on the Slave machine):

    Host slave
    User <username>

If you have more slaves in your cluster, add Host and User lines for those as well. On each slave, create a file called config and add the following lines (replacing “<username>” with the username you’re using on the Master machine):

    Host master
    User <username>

Now test this out. On the master, go to Cygwin and issue the following command:

    $> ssh slave

You should be automatically logged into the slave machine with no username and no password required.
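With more than a couple of slaves, typing these Host/User pairs by hand gets tedious. Here is a minimal sketch that appends one pair per host to an SSH config file, skipping hosts that already have an entry. The helper name `add_ssh_user` and the example usernames are hypothetical; `Host` and `User` are the standard ssh_config keywords used above.

```shell
# add_ssh_user CONFIG HOST USER: append a Host/User block to CONFIG unless
# a "Host HOST" line already exists (hypothetical helper, not an ssh tool).
add_ssh_user() {
  config_file=$1
  host=$2
  user=$3
  # ssh_config keywords are case-insensitive, hence -i
  if grep -Eqi "^[[:space:]]*Host[[:space:]]+$host[[:space:]]*\$" "$config_file" 2>/dev/null; then
    return 0
  fi
  printf 'Host %s\n    User %s\n' "$host" "$user" >> "$config_file"
  # Keep the file private to your user
  chmod 600 "$config_file"
}

# Usage on the master (usernames here are made up):
#   add_ssh_user ~/.ssh/config slave  alice
#   add_ssh_user ~/.ssh/config slave2 bob
```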

Make sure to exit out of your ssh session. For more information on this configuration file’s format and what it does, run man ssh_config in Cygwin.

Step 4: Extract Hadoop (All Machines)

If you haven’t downloaded Hadoop 0.17, go do that now. The file will have a “.tar.gz” extension, which is not natively understood by Windows.

You’ll need something like WinRAR to extract it. (If anyone knows something easier than WinRAR for extracting tarred-gzipped files on Windows, please leave a comment.) Once you’ve got an extraction utility, extract it directly into c:\cygwin\usr\local. (Assuming you installed Cygwin to c:\cygwin as described above.) The extracted folder will be named hadoop-0.17.0. Rename it to hadoop. All further steps assume you’re in this hadoop directory and will use relative paths for configuration files and shell scripts.
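Since Cygwin is already installed at this point, its own tar can do the extraction and the rename in one go. This is a minimal sketch, assuming the tar package was selected in the Cygwin installer and that the download is named hadoop-0.17.0.tar.gz (the archive name is an assumption; the folder name hadoop-0.17.0 comes from the step above). The helper name `extract_hadoop` is hypothetical.

```shell
# extract_hadoop ARCHIVE DEST: unpack the Hadoop tarball into DEST and rename
# the extracted hadoop-0.17.0 folder to hadoop (hypothetical helper).
extract_hadoop() {
  archive=$1
  dest=$2
  mkdir -p "$dest"
  # -x extract, -z gunzip, -f archive file, -C target directory
  tar -xzf "$archive" -C "$dest"
  mv "$dest/hadoop-0.17.0" "$dest/hadoop"
}

# Usage from the Cygwin shell; /usr/local is c:\cygwin\usr\local on disk:
#   extract_hadoop hadoop-0.17.0.tar.gz /usr/local
```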

Step 5: Configure hadoop-env.sh (All Machines)

The conf/hadoop-env.sh file is a shell script that sets up various environment variables that Hadoop needs to run. Open conf/hadoop-env.sh in a text editor.
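One of the variables set in this file is JAVA_HOME, and a Windows path there needs every backslash and every space escaped with a backslash, which is easy to get wrong by hand. As a minimal sketch, the helper below does the escaping and prints a ready-to-paste export line. The name `java_home_line` is hypothetical, and the JDK path is only an example.

```shell
# java_home_line WINPATH: print an "export JAVA_HOME=..." line with the
# backslashes and spaces of a Windows path escaped for a shell script.
# Hypothetical helper, not part of Hadoop.
java_home_line() {
  # First double every backslash, then put a backslash before every space
  escaped=$(printf '%s\n' "$1" | sed -e 's/\\/\\\\/g' -e 's/ /\\ /g')
  printf 'export JAVA_HOME=%s\n' "$escaped"
}

# Usage (single quotes keep the shell from touching the backslashes):
#   java_home_line 'c:\Program Files\Java\jdk1.6.0_06'
```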

Look for the line that starts with “#export JAVA_HOME”. Change that line to something like the following:

    export JAVA_HOME=c:\\Program\ Files\\Java\\jdk1.6.0_06

This should be the home directory of your Java installation. Note that you need to remove the leading “#” (comment) symbol and that you need to escape both backslashes and spaces with a backslash.

Next, locate the line that starts with “#export HADOOP_IDENT_STRING”. Change it to something like the following:

    export HADOOP_IDENT_STRING=MYHADOOP

Where MYHADOOP can be anything you want to identify your Hadoop cluster with. Just make sure each machine in your cluster uses the same value.

Thanks for your tutorial, it was a great help for me.

I set up a mixed Linux/Win Hadoop cluster and everything is working fine. I have an SSH-related question and maybe you can answer it. Is it really necessary that each node in the cluster has the public RSA key of all the other nodes added to its authorized keys? I just added the master’s public RSA key to all the slave nodes and everything is working. But working doesn’t imply that things are running as fast as they could.

Maybe there would be a speed gain if the slave nodes could login to each other with SSH. Cheers Johannes.